Saturday, July 25, 2020

AWS EC2 : Dockerize Java App & Deploy Container

https://youtu.be/ToqoJ8YAkRg

Dockerize an app and deploy it on an EC2 instance

Push the Docker image to a public Docker Hub repository

Steps:

1. Setting up docker engine

sudo yum update -y

sudo amazon-linux-extras install docker

sudo service docker start

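sudo usermod -a -G docker ec2-user

Log out and log back in so the docker group membership takes effect; after that, ec2-user can run docker commands without sudo.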

2. Dockerize your application

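-- Dockerfile

# The java:8 image is deprecated; eclipse-temurin:8-jre is a maintained OpenJDK 8 runtime image
FROM eclipse-temurin:8-jre

WORKDIR /

COPY myapp.jar myapp.jar

COPY application.properties application.properties

# Assumes the app listens on port 5000 (for example server.port=5000 in application.properties)
EXPOSE 5000

# -D options must come before -jar; anything after the jar name is passed to the app as a program argument
CMD ["java", "-Dspring.config.location=file:/application.properties", "-jar", "myapp.jar"]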

3. Building docker image of application:

docker build -t myapp .

4. Tagging image

docker tag myapp nirajtechi/myapp

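5. Mapping a host port to the container port when launching the Docker container

docker run -p 8080:5000 nirajtechi/myapp

-p 8080:5000 publishes container port 5000 on host port 8080, so the app is reachable on port 8080 of the EC2 instance (its security group must allow inbound traffic on that port).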

6. Push image to repository (Docker Hub)

docker login

docker push nirajtechi/myapp

7. Pull image from repository (Docker Hub)

docker pull nirajtechi/myapp
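Steps 3, 4 and 6 can be chained in a small shell function. This is a sketch, not the author's script: the function names run and build_and_push and the DRY_RUN switch are hypothetical, and it assumes the Dockerfile is in the current directory and that docker login has already succeeded.

```bash
#!/usr/bin/env bash
# Sketch: build, tag and push in one call.
# Assumptions: Dockerfile in the current directory, `docker login` already done.
# Set DRY_RUN=1 to print the commands instead of executing them.

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"          # print the command line only
  else
    "$@"               # execute the command
  fi
}

# build_and_push LOCAL_NAME REPOSITORY
build_and_push() {
  local local_name="$1" repo="$2"
  run docker build -t "$local_name" . || return 1
  run docker tag "$local_name" "$repo" || return 1
  run docker push "$repo"
}
```

For example, DRY_RUN=1 build_and_push myapp nirajtechi/myapp only prints the three docker commands, which is a cheap way to check the image names before pushing.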

Java Microservices : Quarkus vs. Spring Boot

Nowadays, with microservice architectures, it may no longer make sense, or offer any advantage, to build something multi-platform (interpreted) for code that will always run in the same place and on the same platform: a Docker container in a Linux environment. Portability is now less relevant (perhaps less relevant than ever), so that extra level of abstraction matters less.

Having said that, let's look at a simple, raw comparison between two alternatives for building microservices in Java: the well-known Spring Boot and the not-yet-so-well-known Quarkus.

Thorntail Community Announcement on Quarkus

The team will continue contributing to SmallRye and Eclipse MicroProfile, while also shifting work towards Quarkus in the future . . .

Thorntail has announced end of life. Everything else on this site is now outdated. We recommend using Quarkus or WildFly.

Thorntail offers an innovative approach to packaging and running Java EE applications by packaging them with just enough of the server runtime to "java -jar" your application. It's MicroProfile compatible, too. And, it's all much, much cooler than that ...

Getting started

In order to help you start using the org.wildfly.plugins:wildfly-jar-maven-plugin Maven plugin, we have defined a set of examples that cover common use-cases.

To retrieve the examples:

git clone -b 2.0.0.Alpha4 https://github.com/wildfly-extras/wildfly-jar-maven-plugin

cd wildfly-jar-maven-plugin/examples

A good example to start with is the jaxrs example. To build and run the jaxrs example:

cd jaxrs

mvn package

java -jar target/jaxrs-wildfly.jar

The plugin documentation (currently an index.html file to download) can be found here. It contains a comprehensive list of the options you can use to fine tune the Maven build and create a bootable JAR.

Be sure to read examples/README, which contains the information required to run the examples in an OpenShift context.

Saturday, July 18, 2020

Using Kubernetes to Deploy PostgreSQL

Config Maps :

===============================

File: postgres-configmap.yaml

---------------------------

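apiVersion: v1
kind: ConfigMap
metadata:
  name: postgres-config
  labels:
    app: postgres
data:
  POSTGRES_DB: postgresdb
  POSTGRES_USER: postgresadmin
  POSTGRES_PASSWORD: admin123

Note: a ConfigMap stores its values in plain text; beyond a demo, keep POSTGRES_PASSWORD in a Secret instead.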

Create Postgres config maps resource

---------------------------

$ kubectl create -f postgres-configmap.yaml

configmap "postgres-config" created

Persistent Storage Volume

===============================

File: postgres-storage.yaml

---------------------------

kind: PersistentVolume
apiVersion: v1
metadata:
  name: postgres-pv-volume
  labels:
    type: local
    app: postgres
spec:
  storageClassName: manual
  capacity:
    storage: 5Gi
  accessModes:
    - ReadWriteMany
  hostPath:
    path: "/mnt/data"
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: postgres-pv-claim
  labels:
    app: postgres
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 5Gi

Create the storage-related resources

---------------------------

$ kubectl create -f postgres-storage.yaml

persistentvolume "postgres-pv-volume" created

persistentvolumeclaim "postgres-pv-claim" created

PostgreSQL Deployment

===============================

File: postgres-deployment.yaml

--------------------------------

# apps/v1 replaces extensions/v1beta1 (removed in Kubernetes 1.16) and requires spec.selector
apiVersion: apps/v1
kind: Deployment
metadata:
  name: postgres
spec:
  replicas: 1
  selector:
    matchLabels:
      app: postgres
  template:
    metadata:
      labels:
        app: postgres
    spec:
      containers:
        - name: postgres
          image: postgres:10.4
          imagePullPolicy: "IfNotPresent"
          ports:
            - containerPort: 5432
          envFrom:
            - configMapRef:
                name: postgres-config
          volumeMounts:
            - mountPath: /var/lib/postgresql/data
              name: postgredb
      volumes:
        - name: postgredb
          persistentVolumeClaim:
            claimName: postgres-pv-claim

Create Postgres deployment

--------------------------------

$ kubectl create -f postgres-deployment.yaml

deployment "postgres" created

PostgreSQL Service

===============================

File: postgres-service.yaml

--------------------------------

apiVersion: v1
kind: Service
metadata:
  name: postgres
  labels:
    app: postgres
spec:
  type: NodePort
  ports:
    - port: 5432
  selector:
    app: postgres

Create Postgres Service

$ kubectl create -f postgres-service.yaml

service "postgres" created

Connect to PostgreSQL

$ kubectl get svc postgres

NAME       TYPE       CLUSTER-IP      EXTERNAL-IP   PORT(S)          AGE
postgres   NodePort   10.107.71.253   <none>        5432:31070/TCP   5m

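psql -h localhost -U postgresadmin --password -p 31070 postgresdb

Use the node port from the PORT(S) column (31070 here). localhost works when the cluster node is the local machine; otherwise connect to the node's IP address.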
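When scripting the connection, the node port can be cut out of the PORT(S) value shown by kubectl get svc. A minimal sketch, assuming a single entry of the form PORT:NODEPORT/PROTOCOL; the function name node_port_from_ports is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: "5432:31070/TCP" -> "31070"
# Assumes exactly one port entry of the form PORT:NODEPORT/PROTOCOL.
node_port_from_ports() {
  local p="${1#*:}"          # drop the service port: "31070/TCP"
  printf '%s\n' "${p%%/*}"   # drop the protocol:     "31070"
}

# With a live cluster, kubectl can return the node port directly:
#   kubectl get svc postgres -o jsonpath='{.spec.ports[0].nodePort}'
```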

Delete the PostgreSQL resources

$ kubectl delete service postgres

$ kubectl delete deployment postgres

$ kubectl delete configmap postgres-config

$ kubectl delete persistentvolumeclaim postgres-pv-claim

$ kubectl delete persistentvolume postgres-pv-volume
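kubectl delete -f accepts the same manifest files that were used to create the resources, so the teardown can also be driven from the files, in reverse order of creation. A sketch assuming the four YAML files from this post are in the current directory; teardown_postgres and the DRY_RUN switch are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: delete service, deployment, storage and config map, in that order.
# Assumes the four manifest files from this post are in the current directory.
# Set DRY_RUN=1 to print the commands instead of running them.
teardown_postgres() {
  local f
  for f in postgres-service.yaml postgres-deployment.yaml \
           postgres-storage.yaml postgres-configmap.yaml; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "kubectl delete -f $f"
    else
      kubectl delete -f "$f"
    fi
  done
}
```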

Other useful Kubernetes links:

Running Galera Cluster on Kubernetes

https://severalnines.com/database-blog/running-galera-cluster-kubernetes

Example: Deploying WordPress and MySQL with Persistent Volumes

https://kubernetes.io/docs/tutorials/stateful-application/mysql-wordpress-persistent-volume/

MicroK8s, Part 3: How To Deploy a Pod in Kubernetes

https://virtualizationreview.com/articles/2019/02/01/microk8s-part-3-how-to-deploy-a-pod-in-kubernetes.aspx

Using Kubernetes to Deploy PostgreSQL

https://severalnines.com/database-blog/using-kubernetes-deploy-postgresql

Setting up PostgreSQL Database on Kubernetes

https://medium.com/@suyashmohan/setting-up-postgresql-database-on-kubernetes-24a2a192e962

Getting started with Docker and Kubernetes: a beginners guide

https://www.educative.io/blog/docker-kubernetes-beginners-guide

https://github.com/vfarcic/k8s-specs/blob/master/pod/db.yml

Sunday, July 12, 2020

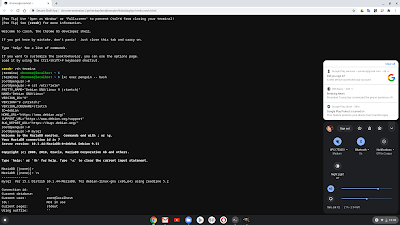

MariaDB in the penguin container of ChromeOS

I have a Chromebook and installed MariaDB in the penguin container (the default Linux container on Chrome OS):

sudo apt install mysql-server

sudo apt install mysql-workbench

systemctl status mariadb

https://discuss.linuxcontainers.org/t/using-lxd-on-your-chromebook/3823

https://www.maketecheasier.com/run-ubuntu-container-chrome-os/

Monday, July 6, 2020

How can Docker help a MariaDB cluster for Disaster/Recovery

https://blog.dbi-services.com/how-can-docker-help-a-mariadb-cluster-for-disaster-recovery/

This post is a work in progress; the original idea comes from the link above.

Mistakes or accidental data deletions can sometimes happen on a production MariaDB Galera Cluster, and they can be disastrous.

I have heard of many such cases from customers; here are some of the most common:

– dropping one column of a table

– dropping one table

– updating a big table without a where clause

What if it was possible to restore online a subset of data without downtime?

MariaDB preparation

We need some help from Docker.

Using a delayed node (the helper), we can deploy a container whose replication runs 15 minutes behind the cluster, but of course you can choose your own lag.

MariaDB > CREATE USER rpl_user@'192.168.56.%' IDENTIFIED BY 'manager';
MariaDB > GRANT REPLICATION SLAVE ON *.* TO rpl_user@'192.168.56.%';
MariaDB > SHOW GRANTS FOR 'rpl_user'@'192.168.56.%';
+-------------------------------------------------------------+
| Grants for rpl_user@192.168.56.%                            |
+-------------------------------------------------------------+
| GRANT REPLICATION SLAVE ON *.* TO 'rpl_user'@'192.168.56.%' |
+-------------------------------------------------------------+

$ vi /storage/mariadb-slave-15m/mariadb.conf.d/my.cnf
[mysqld]
server_id=10015
binlog_format=ROW
log_bin=binlog
log_slave_updates=1
relay_log=relay-bin
expire_logs_days=7
read_only=ON

The most important parameter is MASTER_DELAY: it sets the number of seconds the slave lags behind the master.
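Because MASTER_DELAY is given in seconds, the 15-minute lag used in this post is 15 * 60 = 900. A minimal sketch of a helper that prints the CHANGE MASTER statement for any lag in minutes; change_master_sql is a hypothetical name, and the host, user and password passed to it are just the example values from this post.

```bash
#!/usr/bin/env bash
# Sketch: print a CHANGE MASTER statement with MASTER_DELAY derived from minutes.
# Usage: change_master_sql HOST USER PASSWORD LAG_MINUTES
change_master_sql() {
  local host="$1" user="$2" password="$3" minutes="$4"
  local delay=$(( minutes * 60 ))   # MASTER_DELAY is expressed in seconds
  printf "CHANGE MASTER TO MASTER_HOST='%s', MASTER_USER='%s', MASTER_PASSWORD='%s', MASTER_DELAY=%d;\n" \
    "$host" "$user" "$password" "$delay"
}
```

For example, change_master_sql 192.168.56.203 rpl_user manager 15 prints the statement with MASTER_DELAY=900, ready to run on the delayed node before START SLAVE;.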

CHANGE MASTER TO MASTER_HOST = '192.168.56.203',
  MASTER_USER = 'rpl_user', MASTER_PASSWORD = 'manager',
  MASTER_DELAY = 900;

START SLAVE;

How to use table partitioning to scale PostgreSQL

https://www.enterprisedb.com/postgres-tutorials/how-use-table-partitioning-scale-postgresql

Benefits of partitioning

- PostgreSQL declarative partitioning is highly flexible and gives users good control. Users can create as many levels of partitioning as they need, and can define constraints, triggers, and indexes on each partition separately as well as on all partitions together.

- Query performance can improve significantly compared to selecting from a single large table, because the planner can skip (prune) partitions that cannot contain matching rows.

- The partition-wise join and partition-wise aggregate features (enable_partitionwise_join and enable_partitionwise_aggregate, both off by default) can also speed up complex queries.

- Bulk loads and deletions can be much faster, because these operations can be performed on individual partitions; for example, an old partition can be detached or dropped instead of deleting its rows.

- Each partition can hold data according to how often it is used, so rarely used data can be stored on cheaper or slower media.

When to use partitioning

Partitioning pays off when a single table can no longer deliver acceptable performance. As a rule of thumb, if a table is going to receive a very large volume of data at some point, it is a candidate for partitioning. Besides data volume, consider other factors such as how often the data is updated, how the data is used over time, and how finely it can be divided into ranges. With good planning that takes all of these factors into account, table partitioning can give a significant performance boost and let PostgreSQL scale to larger datasets.

How to use partitioning

As of the PostgreSQL 12 release, List, Range, and Hash partitioning are supported, and these methods can be combined at different levels (sub-partitioning).
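With declarative range partitioning, each partition is a table attached to the parent with FOR VALUES FROM (...) TO (...), where the lower bound is inclusive and the upper bound exclusive. A sketch that generates the DDL for monthly partitions; the parent table measurement and its logdate column are hypothetical, and the parent is assumed to exist already.

```bash
#!/usr/bin/env bash
# Sketch: print CREATE TABLE ... PARTITION OF statements for monthly ranges.
# Assumes a parent table created beforehand, for example (hypothetical names):
#   CREATE TABLE measurement (logdate date NOT NULL, value int)
#     PARTITION BY RANGE (logdate);
# Usage: monthly_partitions TABLE START_YEAR START_MONTH COUNT
# (pass the month as a plain number 1-12, without a leading zero)
monthly_partitions() {
  local table="$1" year="$2" month="$3" count="$4" i
  local next_year next_month
  i=0
  while [ "$i" -lt "$count" ]; do
    if [ "$month" -eq 12 ]; then
      next_year=$((year + 1)); next_month=1
    else
      next_year=$year; next_month=$((month + 1))
    fi
    # FROM is inclusive and TO is exclusive, so consecutive months do not overlap.
    printf "CREATE TABLE %s_%04d_%02d PARTITION OF %s FOR VALUES FROM ('%04d-%02d-01') TO ('%04d-%02d-01');\n" \
      "$table" "$year" "$month" "$table" "$year" "$month" "$next_year" "$next_month"
    year=$next_year; month=$next_month
    i=$((i + 1))
  done
}
```

For example, monthly_partitions measurement 2020 11 3 | psql -d mydb (mydb being a hypothetical database) would create the partitions for November 2020 through January 2021.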

Sunday, July 5, 2020

Other MySQL commands

MySQL default port = 3306

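MySQL USER

CREATE USER 'SUPER'@'%' IDENTIFIED BY 'toor';

GRANT SELECT ON *.* TO 'SUPER'@'%';

ALTER USER 'SUPER'@'%' PASSWORD EXPIRE;

flush privileges;

PASSWORD EXPIRE forces SUPER to set a new password (ALTER USER USER() IDENTIFIED BY 'new_password';) at the next login before any other statement is allowed.

mysql -u SUPER -P 3306 -h 127.0.0.1 -p

With -p and no value, mysql prompts for the password; in "-p toor" the space makes toor the default database name, not the password.

show grants for 'SUPER'@'%';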

select @@port , @@hostname;

show global variables\G

select @@version , @@port , @@hostname , @@datadir , @@socket , @@log_error , @@pid_file , @@max_connections , @@log_bin , @@expire_logs_days;

show global variables like "datadir";

show global variables like "%port%";

SHOW GLOBAL VARIABLES LIKE '%bin%';

SHOW GLOBAL VARIABLES LIKE '%log%';

show slave status\G

show master status\G

select host,user,authentication_string from mysql.user where user like 'R%';

sfx=$(ps -efa | grep mysql | grep 'suffix')
if [ -n "${sfx}" ]
then
  instlist=$(ps -ef | grep 'mysqld ' | grep -o '@.*' | cut -c2- | awk '{print $1}' | sort)
else
  instlist=$(ps -efa | grep 'mysqld ' | grep -o -e '--port=[0-9]*' | cut -d= -f2 | sort)
fi
echo $instlist
for i in $instlist; do echo $i ; done
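To check the port parsing without a running server, the extraction can be wrapped in a function that reads ps-style lines from stdin. A sketch assuming each instance is started with --port=NNNN on its command line; ports_from_ps is a hypothetical name. Matching --port=[0-9]* rather than port=.* keeps options that follow the port (such as --socket=...) and look-alikes such as --mysqlx-port out of the list.

```bash
#!/usr/bin/env bash
# Sketch: extract mysqld port numbers from ps-style lines on stdin.
# Assumes the port appears as --port=NNNN on the mysqld command line.
ports_from_ps() {
  grep 'mysqld ' | grep -o -e '--port=[0-9]*' | cut -d= -f2 | sort -u
}

# Live usage:
#   instlist=$(ps -efa | ports_from_ps)
```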

select * from information_schema.processlist where state like '%lock%';

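su ansible -c "mysqladmin --login-path=labelPORT status processlist shutdown"

Careful: the shutdown subcommand stops the instance after status and processlist have been printed.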

instlist="3366 3367 3368 3369";for INSTANCE in $instlist; do echo ${INSTANCE};mysql --login-path=label${INSTANCE} -e "\s" | grep Up ; done

NO COLOR

echo $PS1

export PS1="[\u@\h \d \@ \w]$ "

mysql --login-path=label${INSTANCE} -e 'SELECT table_schema "database", SUM(data_length + index_length)/1024/1024/1024 "size in GB" FROM information_schema.TABLES GROUP BY table_schema;'

instlist=$( ps -efa | grep 'mysqld ' | grep -o 'pid-file=.*' | awk '{print $1}' | cut -d'.' -f1 | rev | cut -c1-4 | rev | sort );

for INSTANCE in $instlist; do echo ${INSTANCE};mysql --login-path=label${INSTANCE} -e "\s" | grep "Server version" ; done

5.6=>

instlist56=$(ps -efa | grep 'mysqld ' | grep -o -e '--port=[0-9]*' | cut -d= -f2 | sort)

for INSTANCE in $instlist56; do echo ${INSTANCE};/home/usr/bin/mysql --login-path=label${INSTANCE} -e "\s" | grep "Server version"; done

instlist57=$(ps -ef | grep 'mysqld '| grep -o '@.*' | cut -c2- | awk '{print $1}' | sort );

for INSTANCE in $instlist57; do echo ${INSTANCE};mysql --login-path=label${INSTANCE} -e "\s" | grep "Server version"; done

<=5.7

sfx=$(ps -efa | grep mysql | grep 'suffix')
if [ -n "${sfx}" ]
then
  instlist=$(ps -ef | grep 'mysqld ' | grep -o '@.*' | cut -c2- | awk '{print $1}' | sort)
else
  instlist=$(ps -efa | grep 'mysqld ' | grep -o -e '--port=[0-9]*' | cut -d= -f2 | sort)
fi
echo $instlist
for INSTANCE in $instlist
do
  echo ${INSTANCE}
  mysql --login-path=label${INSTANCE} -e 'SELECT table_schema "database", SUM(data_length + index_length)/1024/1024/1024 "size in GB" FROM information_schema.TABLES GROUP BY table_schema ORDER BY 2 DESC;'
done

mysql_config_editor set --login-path=label3306 --host=127.0.0.1 --user=USER --port=3306 --password

mysql_config_editor print --all
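With several instances on one host, the label<PORT> login paths can be created in a loop. A sketch: create_login_paths and the DRY_RUN switch are hypothetical, USER is the same placeholder as above, and mysql_config_editor prompts for each password because of --password.

```bash
#!/usr/bin/env bash
# Sketch: one login path per port, named label<PORT> as in the commands above.
# USER is a placeholder account name. Set DRY_RUN=1 to print instead of run.
create_login_paths() {
  local port
  for port in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "mysql_config_editor set --login-path=label${port} --host=127.0.0.1 --user=USER --port=${port} --password"
    else
      mysql_config_editor set --login-path="label${port}" --host=127.0.0.1 \
        --user=USER --port="${port}" --password
    fi
  done
}
# Example: create_login_paths 3366 3367 3368 3369
```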

mysql -u db_user -S/home/mysql/socket/my.sock -p

/home/usr/bin/mysql --login-path=label3306 -e 'show global variables like "%expire_logs_days%"'

/home/usr/bin/mysql --login-path=label3306 -e 'show global variables like "%log%"'

/home/usr/bin/mysql --login-path=label3306 -e 'show global variables like "general_log_file"'

/home/usr/bin/mysql --login-path=label3306 -e 'show global variables like "log_error"'

/home/usr/bin/mysql --login-path=label3306 -e 'show global variables like "%log_bin%"'

/home/usr/bin/mysql --login-path=label3306 -e 'show global variables like "%relay_log%"'

/home/usr/bin/mysql --login-path=label3306 -e 'show global variables like "%slow_query_log%"'

CLUSTER RHEL7 : sudo pcs status

sudo su - ansible + root on labelJUmper

mysql -h 127.0.0.1 -u USER -p -P port

mysql -u USER -P 3306 -h 127.0.0.1 -p

systemctl status mysqld@port

mysql --login-path=labelPORT

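/opt/rh/mysql55/root/usr/bin/mysql -uroot -p pact -e "SELECT * FROM table WHERE field IN ('3520','3535','3540') INTO OUTFILE 'autorisatie.csv' FIELDS TERMINATED BY ',';"

(-p prompts for the password; pact is the database name. The SQL is wrapped in double quotes so the single-quoted values inside it survive the shell.)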

How To Copy a Folder

https://www.cyberciti.biz/faq/copy-folder-linux-command-line/

cp -var /home/source/folder /backup/copy/destination

Where,

-a : Archive mode; preserves attributes such as file ownership and timestamps (and already implies -r).

-v : Verbose output.

-r : Copy directories recursively.
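A small wrapper around cp -a that copies a folder into a date-stamped directory. A sketch only: backup_folder and the <name>-YYYYMMDD layout are hypothetical. It refuses to reuse an existing destination, because cp -a src dest copies src inside dest when dest already exists.

```bash
#!/usr/bin/env bash
# Sketch: copy SRC to DEST_ROOT/<basename of SRC>-YYYYMMDD with cp -a
# and print the path of the new copy.
backup_folder() {
  local src="$1" dest_root="$2"
  local dest
  dest="${dest_root}/$(basename "$src")-$(date +%Y%m%d)"
  if [ -e "$dest" ]; then
    echo "backup_folder: $dest already exists" >&2
    return 1
  fi
  mkdir -p "$dest_root" || return 1
  cp -a "$src" "$dest" || return 1   # archive mode: recursive, keeps attributes
  printf '%s\n' "$dest"
}
```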

Use Linux rsync Command to copy a folder

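rsync -av /path/to/source/ /path/to/destination/

The trailing slash on the source matters: /path/to/source/ copies the contents of the directory, while /path/to/source (no slash) copies the directory itself into the destination.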

To back up my home directory, which contains large files and mail folders, to /media/backup, enter:

$ rsync -avz /home/vivek /media/backup

To copy a folder to a remote machine called server1.cyberciti.biz:

$ rsync -avz /home/vivek/ server1.cyberciti.biz:/home/backups/vivek/

Where,

-a : Archive mode i.e. copy a folder with all its permission and other information including recursive copy.

-v : Verbose mode.

-z : Compress file data during the transfer, which reduces the amount of data sent to the destination machine; this is useful over a slow connection.

You can show progress during the transfer with the --progress option, or with -P (which combines --partial and --progress):

$ rsync -av --progress /path/to/source/ /path/to/dest

MySQL USER: full privileges

GRANT ALL PRIVILEGES ON *.* TO 'SUPER'@'%' WITH GRANT OPTION;
